Projects and Theses

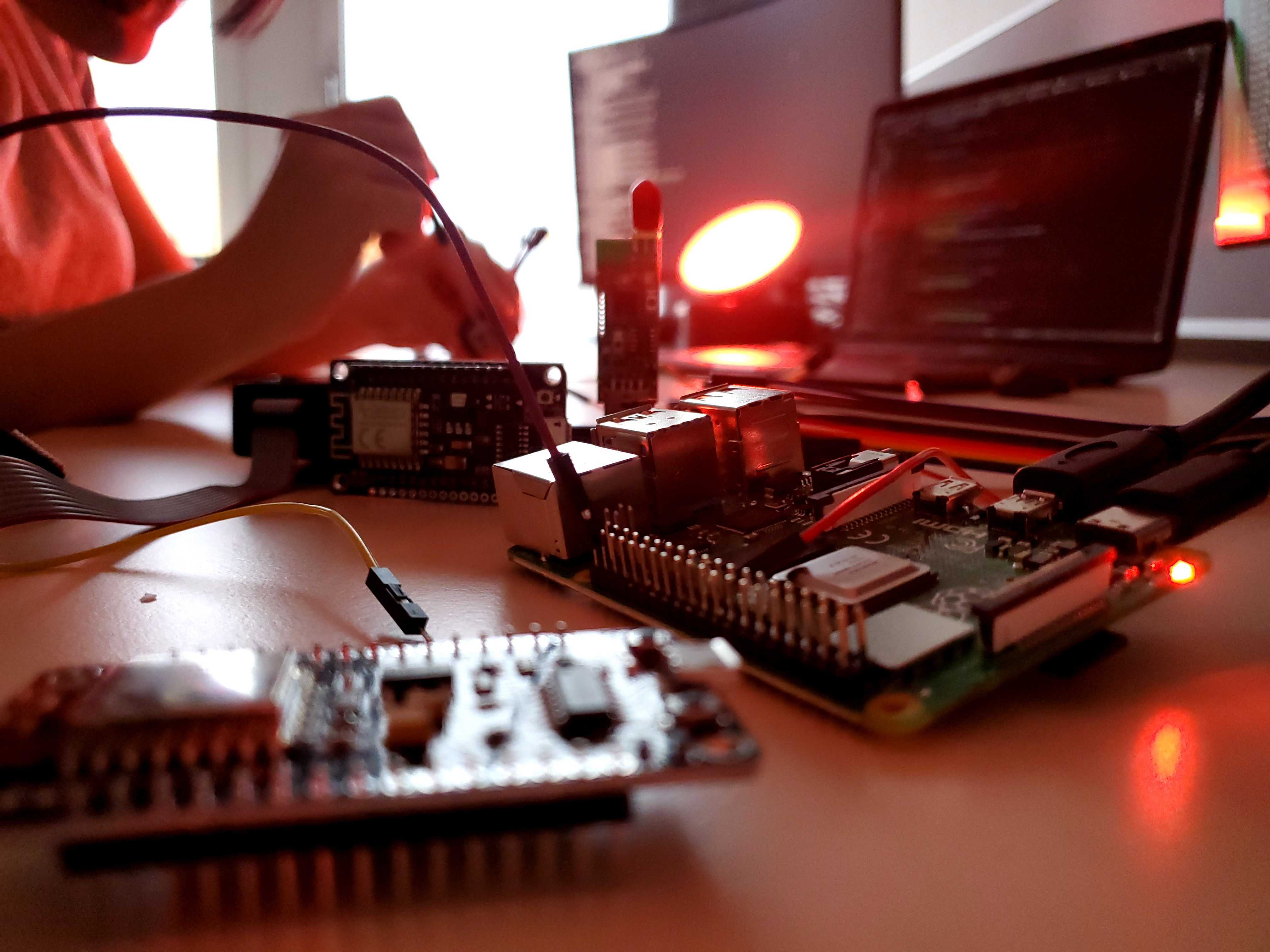

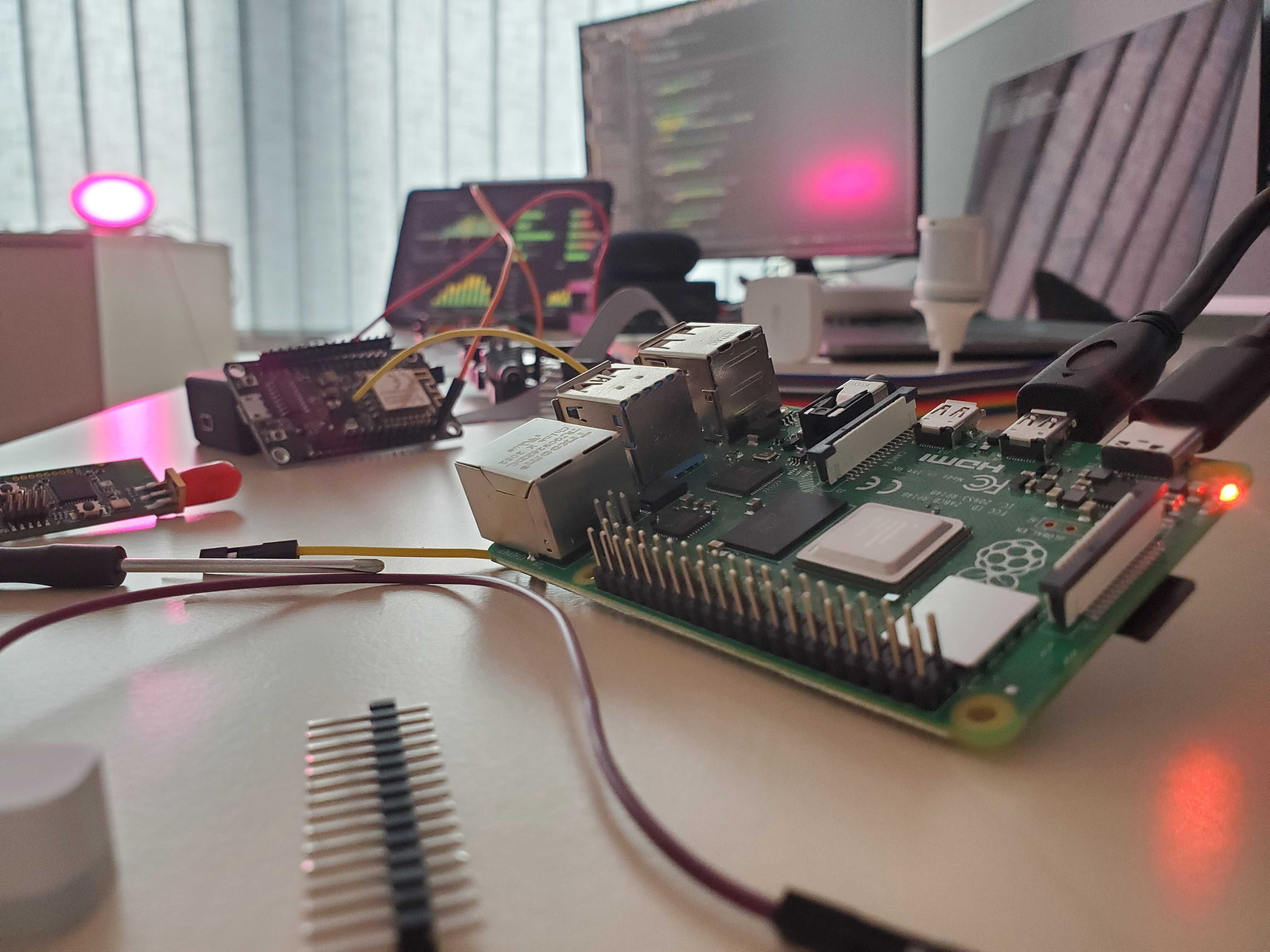

SmartEx: An Explainability Engine for Smart Homes

SmartEx adds an explainable layer to automated smart home environments. It helps users understand the reasoning behind system behaviors through context-aware, user-centered explanations. Built on top of Home Assistant, it supports a wide range of smart devices and includes a reference set of explanation cases.

[View Poster], [Read the Paper]

Generating Context-Aware Contrastive Explanations

B.Sc. Thesis by Lars Herbold

This thesis explores the generation of human-like, contrastive explanations in smart home environments. Instead of answering general “Why?” questions, it answers “Why this rather than that?”, reflecting how users typically reason about system behavior.

The approach predicts what the user might have expected to happen and constructs explanations based on this contrast. It is implemented as a plugin for Home Assistant and evaluated across four realistic smart home scenarios.
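To make the idea of contrast concrete, here is a minimal sketch, not the thesis implementation. It assumes, purely for illustration, that the user's expectation can be approximated by the most frequent outcome in a history of past system actions. The function names and the string-based outcomes are hypothetical.

```python
from collections import Counter
from typing import Optional, Sequence


def predict_expected(history: Sequence[str]) -> Optional[str]:
    """Assumption: the most frequent past outcome stands in for the user's expectation."""
    if not history:
        return None
    return Counter(history).most_common(1)[0][0]


def contrastive_question(actual: str, history: Sequence[str]) -> Optional[str]:
    """Build a 'Why this rather than that?' question, or None if nothing contrasts."""
    expected = predict_expected(history)
    if expected is None or expected == actual:
        return None  # no expectation available, or behavior matched it
    return f"Why '{actual}' rather than '{expected}'?"


history = ["light_on", "light_on", "light_off"]
print(contrastive_question("light_off", history))
```

A real system would likely predict the expectation from richer context than frequency alone; the sketch only shows how a fact and a predicted alternative combine into a contrastive question.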

Counterfactual Explanations in Rule-Based Smart Environments

M.Sc. Thesis by Anna Trapp

This thesis introduces a framework for generating counterfactual explanations in smart environments. These explanations focus on what could have happened differently and offer users actionable insights for understanding and improving system behavior.

The project formally defines counterfactual explanations for rule-based systems and evaluates their potential to enhance user understanding, trust, and control. It includes a fully implemented prototype and a user-centric evaluation, addressing an important open challenge in the field.
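As an illustration of what a counterfactual for a rule-based system can look like, the following sketch assumes a deliberately simplified rule model: a rule fires only when every listed state variable equals its required value. This model, the function names, and the example state are assumptions for illustration and are not taken from the thesis.

```python
from typing import Any, Dict

Conditions = Dict[str, Any]  # required value per state variable (hypothetical model)
State = Dict[str, Any]       # current value per state variable


def rule_fires(conditions: Conditions, state: State) -> bool:
    """A rule fires only if every condition matches the current state."""
    return all(state.get(name) == value for name, value in conditions.items())


def counterfactual_changes(conditions: Conditions, state: State) -> Conditions:
    """Return the unmet conditions: the smallest set of changes that would make the rule fire."""
    return {name: value for name, value in conditions.items() if state.get(name) != value}


conditions = {"motion": "on", "sun": "below_horizon"}
state = {"motion": "on", "sun": "above_horizon"}

changes = counterfactual_changes(conditions, state)
for name, value in changes.items():
    print(f"If '{name}' had been '{value}', the rule would have fired.")
```

For purely conjunctive equality conditions, the unmet conditions are exactly the minimal change set. Rules with thresholds, disjunctions, or interacting rules would need a real search over possible states.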

Explaining the Unexplainable: The Impact of Misleading Explanations on Trust in Unreliable Predictions for Hardly Assessable Tasks

B.Sc. Thesis by Daniel Pöttgen

To increase trust in systems, engineers strive to create explanations that are as accurate as possible. However, when system accuracy is compromised, these explanations may become misleading, especially when users cannot independently judge the correctness of the system’s output.

This project investigates the impact of such misleading explanations through an online survey with 162 participants. The study found that users exposed to misleading explanations rated their trust significantly higher and were more likely to align with the system’s prediction, despite its unreliability.

These findings highlight the importance of caution when generating explanations for hardly assessable tasks and call attention to the ethical risks of explanation design in AI systems.

Surprise-Based Detection of Explanation Needs in Smart Environments

M.Sc. Thesis by Marius Schäfer

This project investigates how surprise can serve as a quantitative indicator of when users desire explanations in intelligent systems. While much research in explainability focuses on how to generate good explanations, this thesis explores when explanations are needed at all.

The core idea is that actions which strongly violate user expectations produce a measurable level of surprise. The thesis examines and adapts existing mathematical models to quantify surprise, defines threshold levels for triggering explanations, and evaluates the approach within the context of smart environments.
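The threshold idea can be sketched with one well-known surprise measure, Shannon surprisal, which scores an event with probability p as -log2(p) bits. Whether the thesis uses this particular model is not stated here. The choice of measure, the function names, and the 3-bit threshold are assumptions for illustration only.

```python
import math


def surprisal(probability: float) -> float:
    """Shannon surprisal in bits: rare events score high, certain events score zero."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(probability)


def needs_explanation(probability: float, threshold_bits: float = 3.0) -> bool:
    """Trigger an explanation when surprise reaches the (hypothetical) threshold."""
    return surprisal(probability) >= threshold_bits


# Hypothetical probabilities the user would assign to two system actions.
for action, p in [("heating turns on at 6 am", 0.5), ("blinds close at noon", 0.05)]:
    print(action, round(surprisal(p), 2), needs_explanation(p))
```

With a 3-bit threshold, any action the user would have considered less likely than 1 in 8 triggers an explanation. In practice, the threshold and the source of the probabilities would have to be calibrated empirically.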

Explanation Cases: A Taxonomy for Explainable Software Systems

This project introduces a taxonomy that categorizes different reasons why users might need explanations in interactive intelligent systems. It highlights that mismatches between user expectations and system behavior can lead to explanation needs, which may result from errors, goal conflicts, or interference from other agents.

Ongoing Theses

Towards Objective Surprise Detection – Evaluating Mathematical Models with Physiological Indicators

- Imke Schwenke (M.Sc. Thesis)

From Issues to Insights: RAG-based Explanation Generation from Software Engineering Artifacts

- Daniel Pöttgen (M.Sc. Thesis)